Today, many companies are integrating GenAI-based assistants and agents into their products to enhance operational efficiency. However, not all assistants and agents deliver the same value. Building an effective assistant or agent that can truly support operations requires the right instrumentation, data foundation, and model architecture. This blog explores ng-voice’s approach to developing a GenAI-based assistant and an initial set of agents tailored for Voice over LTE (VoLTE) and Voice over New Radio (VoNR) operations. It outlines the architecture, shares key implementation learnings, and highlights how closed-loop automation models, when combined with predictive capabilities and GenAI, can improve usability on the way to an autonomous operations model for mobile voice services.

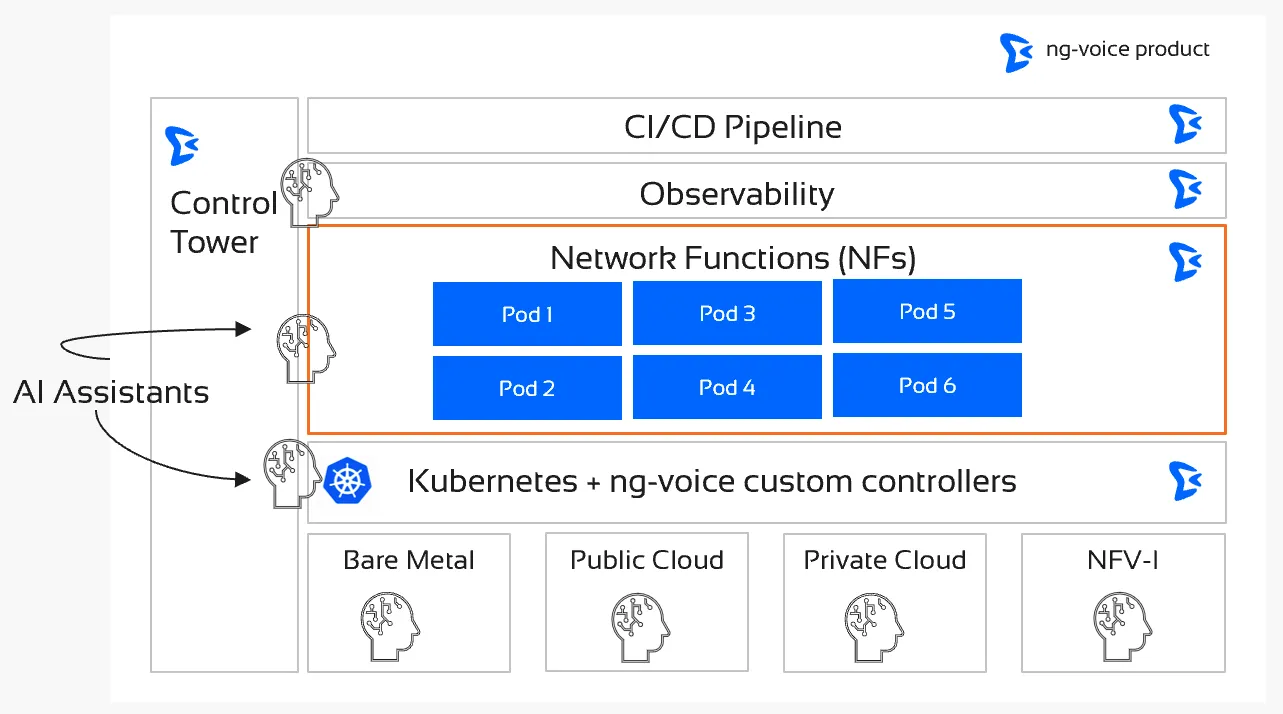

ng-voice is developing a comprehensive framework of GenAI-based assistants and agents that operate across various layers of the cloud stack to streamline and enhance operations. At the core of this approach is an assistant that interfaces directly with the IP Multimedia Subsystem (IMS) components. However, the scope goes far beyond a single assistant. In the overall data-center architecture, multiple assistants and agents are deployed at different levels, covering the public cloud layer, observability tools, operational processes, and specific network functions.

Network functions such as IMS and packet core together enable voice services across the infrastructure, and they call for a broader set of network assistants and agents. These agents play specific roles, including troubleshooting and lifecycle management of individual components. Each agent operates independently but within a coordinated cloud-native framework that is fully API-driven and microservices-based.

These assistants and agents call APIs in real time to retrieve relevant information and feed it into a processing pipeline. A simple way to build an assistant/agent is to use the RASA framework with a basic natural language understanding (NLU) model, which enables the assistant/agent to interpret inputs and context. This foundation is then extended using large language models (LLMs) to deliver more advanced and context-aware responses. Multiple bot frameworks are available to support these use cases, including Watson AI, Microsoft Bot Framework, GitHub Copilot, Amazon Lex, and RASA. Among these, ng-voice chose RASA to drive the first iteration of its assistant/agent. RASA is an open source framework for building a simple yet powerful assistant architecture, enabling full control, customization, and integration within the existing cloud environment.

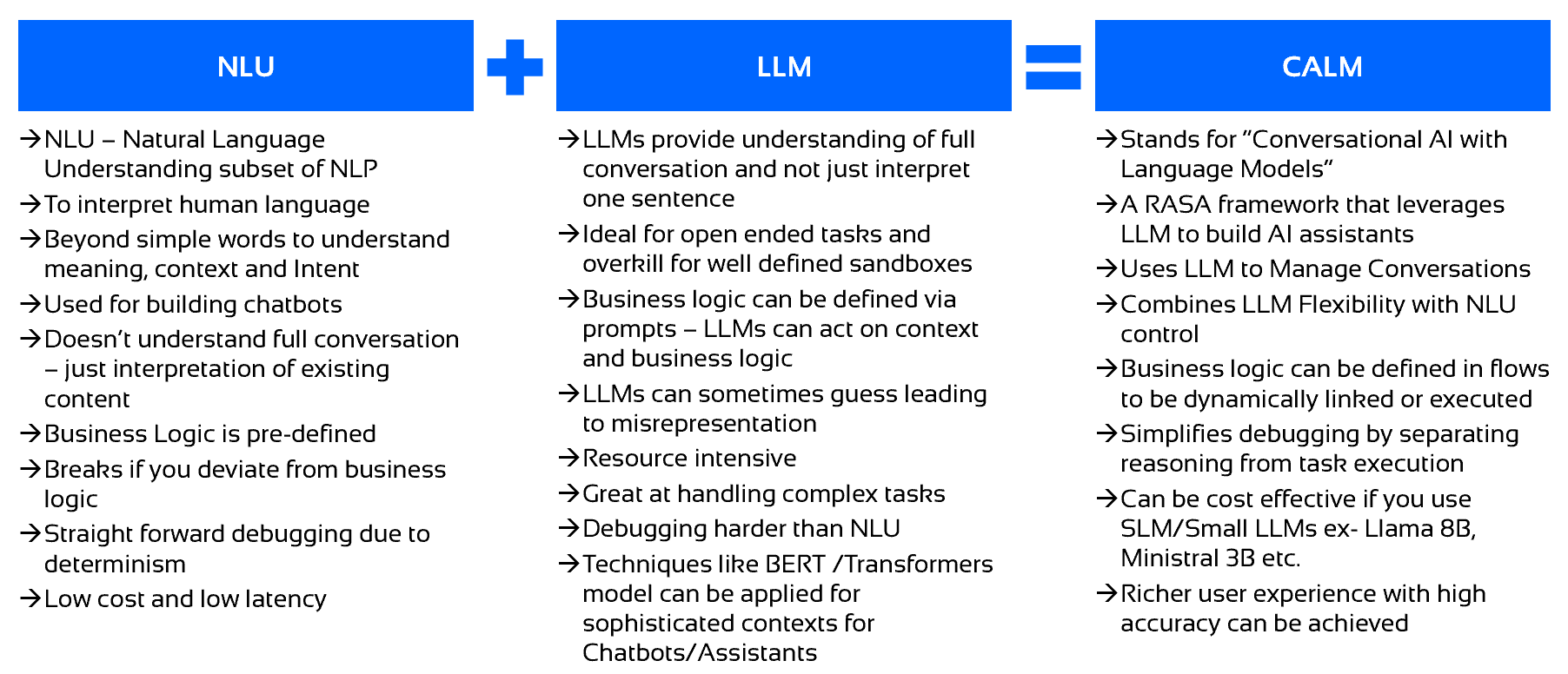

The RASA framework allows you to combine an assistant/agent built using NLU with an LLM to create Conversational AI with Language Models (CALM). CALM is a hybrid approach that blends the structured precision of NLU with the flexible reasoning power of LLMs. This combination enables richer, more context-aware interactions while maintaining control over logic execution. Here is a breakdown of how we moved from a basic NLU to a full CALM-based assistant/agent.

We began with a basic NLU model (NLU is a subset of Natural Language Processing, or NLP). NLU enables the system to understand the syntax and semantics of natural language sentences and to interpret individual lines. It goes beyond simple word recognition by parsing sentences and capturing small amounts of context. These models are particularly easy to implement when building chatbots.

However, an NLU operates in a sandboxed environment and does not comprehend the full flow of a conversation. Its context window is small, so it cannot recall interactions from several steps earlier, which limits its ability to generate meaningful or contextually rich responses. This limitation may or may not matter depending on the use case. The approach is notable for its simplicity because the business logic is predefined rather than dynamic, but the trade-off is rigidity: if the conversation deviates from the defined logic, the model breaks. That said, it remains easy to debug, extremely low cost, and very efficient in terms of latency, making it a solid starting point.
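To make the trade-off concrete, here is a minimal sketch of a stateless intent matcher. It is not RASA's NLU pipeline; the intent names, example phrasings, and the word-overlap (Jaccard) scoring are all assumptions chosen for illustration. Each message is scored on its own, so a paraphrase of a known request is matched, while a follow-up that depends on earlier turns is not.

```python
import re
from typing import Dict, List, Optional, Set

# Hypothetical intents with example phrasings (not ng-voice's training data).
INTENT_EXAMPLES: Dict[str, List[str]] = {
    "check_component_status": [
        "show status of ims components",
        "are the ims components healthy",
    ],
    "run_component_tests": [
        "test all ims components",
        "run tests on the ims components",
    ],
}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def classify(message: str, threshold: float = 0.5) -> Optional[str]:
    """Return the best-matching intent, or None if nothing is close enough.

    The function only sees the current message: there is no conversation
    memory, which mirrors the small context window described above.
    """
    words = tokenize(message)
    best_intent: Optional[str] = None
    best_score = 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            example_words = tokenize(example)
            # Jaccard similarity: shared words divided by all distinct words.
            score = len(words & example_words) / len(words | example_words)
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None
```

With these made-up examples, `classify("Test all the IMS components")` resolves to `run_component_tests`, while a follow-up such as `classify("What about the other one?")` returns `None` because nothing from the previous turn is carried over.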

To enhance the assistant’s capabilities, an LLM can be added to the NLU model. The LLM introduces conversational abilities with larger context windows, enabling the assistant to handle open-ended tasks. It can incorporate business logic dynamically, apply a degree of guesswork, and provide richer context. While this increases flexibility, it also introduces challenges: LLMs are resource-intensive even for inference and more difficult to debug than NLU models. They are, however, highly effective in managing complex tasks. Using transformer techniques such as BERT (Bidirectional Encoder Representations from Transformers), the assistant can be made even more advanced.

CALM combines the strengths of both approaches. By integrating NLU and LLM within the RASA framework, CALM provides the structure and precision of NLU with the flexibility and contextual understanding of LLMs. This architecture allows for dynamic business logic, manages conversations intelligently, and simplifies debugging by separating reasoning from task execution. It also keeps costs down when smaller language models such as Llama 8B or Mistral 3B are used. This is the main reason ng-voice selected the RASA framework: it enabled the team to quickly implement a robust and scalable assistant/agent model.
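The idea of separating reasoning from task execution can be sketched in a few lines. This is an illustration only, not RASA's API: the flow names, the step names, and the `choose_flow` callable (which stands in for a language model call) are hypothetical. The model's only job is to pick a flow; the steps of that flow are fixed and run by ordinary code.

```python
from typing import Callable, Dict, List

# Hypothetical flows: fixed, ordered steps that ordinary code executes.
FLOWS: Dict[str, List[str]] = {
    "test_ims_components": ["list_components", "run_tests", "summarize_results"],
    "check_diameter_links": ["list_peers", "check_connections"],
}

# Stand-in for a language model call:
# (message, conversation history, available flow names) -> chosen flow name.
FlowChooser = Callable[[str, List[str], List[str]], str]


def plan(message: str, history: List[str], choose_flow: FlowChooser) -> List[str]:
    """Let the model choose a flow, then return that flow's predefined steps.

    The chooser's answer is validated against FLOWS, so a wrong guess
    cannot trigger an action that was never defined.
    """
    candidate = choose_flow(message, history, sorted(FLOWS))
    if candidate not in FLOWS:
        return ["ask_user_to_rephrase"]
    # Copy the list so callers cannot modify the flow definition.
    return list(FLOWS[candidate])
```

Because the chooser is just a function argument, it can be replaced by a canned stub when debugging, and a chooser that returns an unknown name yields `["ask_user_to_rephrase"]` instead of an undefined action.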

At ng-voice, the assistant/agent implementation began with a focus on speed and determinism using the RASA framework.

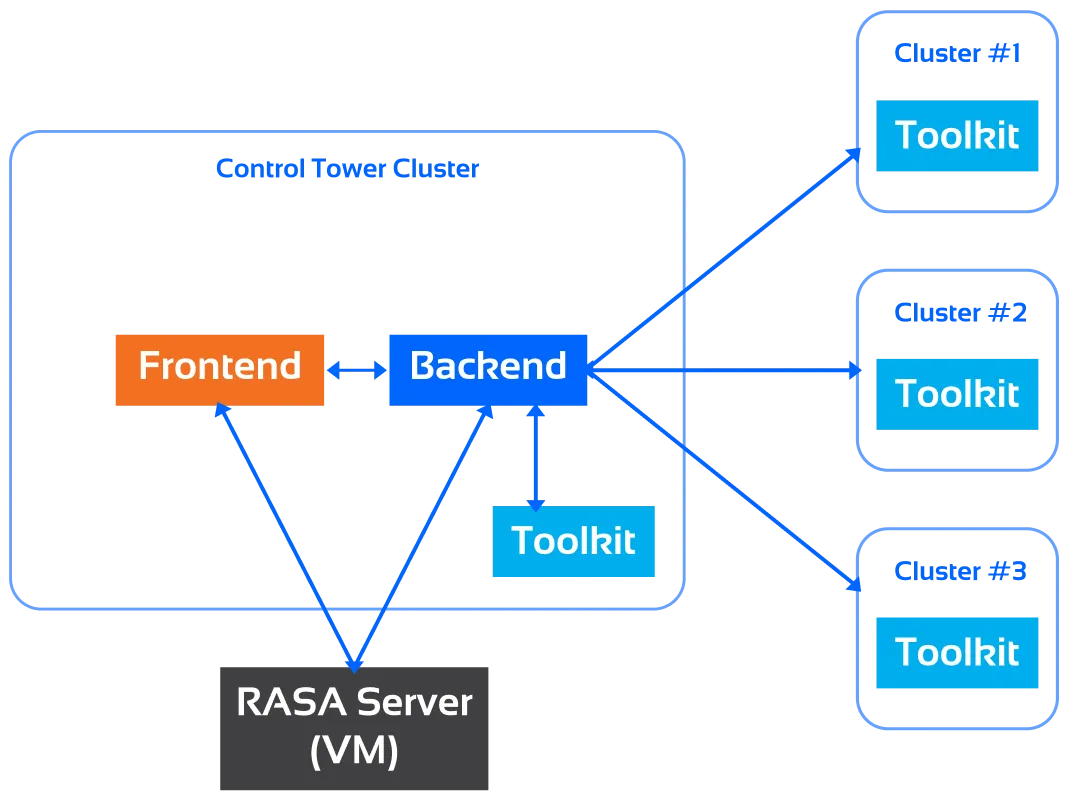

ng-voice started with a lightweight architecture: a simple frontend where users could input queries, supported by a backend where all the core logic and decision-making takes place. The backend interacts with a custom-built toolkit, which includes a set of APIs and scripts tied to our network functions, and applies this toolkit across Kubernetes-based clusters.

At the center of this system is the RASA server, deployed on a virtual machine (VM) or other machine outside the Control Tower’s main components but within the same cluster. When a user enters a query in the frontend, it is routed to the RASA server, which uses an NLU model to interpret the intent. The backend then invokes the toolkit to execute the appropriate action. Because everything is API-driven and sandboxed, the system is extremely fast and easy to integrate into our existing element management system, the Control Tower, making deployment and iteration possible within a single day.
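As a rough sketch of the first hop in this path, the snippet below shows how a frontend could forward a user's query to the server. It assumes the server exposes Rasa's REST channel (`/webhooks/rest/webhook`), which accepts a JSON body with `sender` and `message` fields and returns a list of replies. Whether ng-voice's frontend uses this channel is not stated here, and the host name and sender ID in the usage note are placeholders.

```python
import json
import urllib.request
from typing import List


def build_request(base_url: str, sender_id: str, text: str) -> urllib.request.Request:
    """Build the POST request for the REST channel without sending it."""
    payload = json.dumps({"sender": sender_id, "message": text}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/webhooks/rest/webhook",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, sender_id: str, text: str, timeout: float = 10.0) -> List[str]:
    """Send one user message and return the text of each reply."""
    request = build_request(base_url, sender_id, text)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        replies = json.loads(response.read().decode("utf-8"))
    # Replies may also carry images or buttons; keep only the text ones.
    return [reply["text"] for reply in replies if "text" in reply]
```

A call such as `ask("http://rasa.example:5005", "noc-engineer-1", "Test all the IMS components in this namespace")` would then return the assistant's replies as plain strings for the frontend to display.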

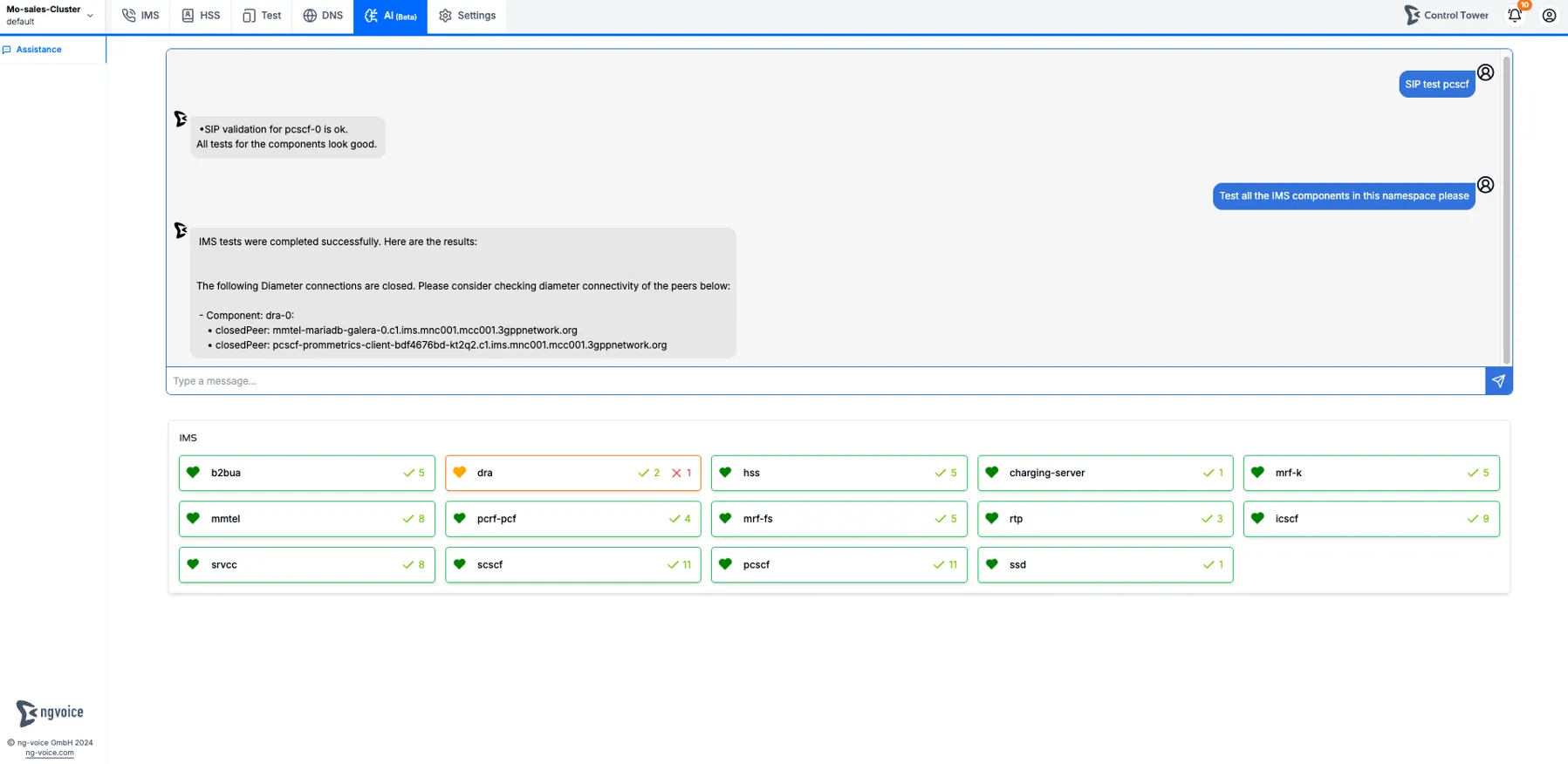

The next step was enhancing the assistant’s flexibility and conversational capability. The accompanying image shows the evolved system, where the assistant can now respond to more natural and varied queries like “Test all the IMS components in this namespace” and return live diagnostic insights.

Because it’s still powered by NLU underneath, users don’t need to memorize rigid command structures. The assistant can understand input phrased in different ways. With an LLM integrated into the backend using RASA Pro, ng-voice is able to add richer context awareness, handle broader open-ended prompts, and maintain stateful conversations. The agent retrieves information from real-time API calls, processes logic, and presents results such as component status, failed tests, or closed Diameter connections, all within the same chat interface. This transition marks the shift to a CALM-based model that blends the precision of NLU with the reasoning ability of LLMs.
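The last step of that loop, turning raw results into a chat reply, might look like the sketch below. The `ComponentReport` shape, the component names, and the reply wording are hypothetical; in practice the data would come from the real-time API calls described above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ComponentReport:
    """Hypothetical result of checking one IMS component."""
    name: str
    healthy: bool
    failed_tests: List[str] = field(default_factory=list)
    closed_diameter_peers: List[str] = field(default_factory=list)


def summarize(namespace: str, reports: List[ComponentReport]) -> str:
    """Render a short, chat-friendly summary of the diagnostic results."""
    problems = [
        r for r in reports
        if not r.healthy or r.failed_tests or r.closed_diameter_peers
    ]
    ok_count = len(reports) - len(problems)
    lines = [f"{ok_count}/{len(reports)} components OK in namespace '{namespace}'."]
    for report in problems:
        details = []
        if not report.healthy:
            details.append("not ready")
        if report.failed_tests:
            details.append("failed tests: " + ", ".join(report.failed_tests))
        if report.closed_diameter_peers:
            details.append(
                "closed Diameter connections: " + ", ".join(report.closed_diameter_peers)
            )
        lines.append(f"- {report.name}: " + "; ".join(details))
    return "\n".join(lines)
```

Keeping the formatting in plain code like this means the wording of status messages stays predictable, even if a language model handles the conversation around it.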

The agent can be embedded within the Control Tower software, as shown in Fig 4, to provide a seamless operational model that helps deployment and NOC engineers configure, monitor, and troubleshoot the IMS cluster, including the underlying cloud stack.

Cloudification and automation are foundational to enabling AI-driven operations in telecom. When introducing AI for AIOps, it’s essential to start with frameworks that align with your goals, whether simple assistants/agents built using NLU or more advanced models leveraging LLMs within CALM-based architectures. Starting small and incrementally enhancing your capabilities is a pragmatic approach, especially given the layered complexity of telco operations.

Voice services, such as those implemented through ng-voice IMS, present a focused domain where AI agents can be effectively demonstrated. However, achieving an intelligent autonomous infrastructure requires addressing broader coordination challenges, including the orchestration and communication of multiple AI agents working across different domains.

As we move into 2025, Agentic AI, capable of iterative reasoning, planning, and autonomous action, emerges as the defining challenge and opportunity. It represents the next evolution beyond predictive and generative AI. The path forward will require collaboration, experimentation, and shared problem-solving to truly realize the vision of Telecom AIOps powered by intelligent, distributed, and autonomous agents.

Traditional automation relies on static rules and scripts. GenAI-based assistants bring adaptability, conversational interfaces, and predictive capabilities that help operators troubleshoot, configure, and optimize IMS and packet core networks more efficiently.

CALM (Conversational AI with Language Models) combines the precision of Natural Language Understanding (NLU) with the reasoning power of Large Language Models (LLMs). In telecom, this means assistants can execute deterministic commands while still managing open-ended, context-rich conversations, which are essential for complex IMS and VoLTE/VoNR environments.

LLMs can be resource intensive, but frameworks like RASA allow operators to balance NLU precision with LLM flexibility. By using smaller models, assistants can stay cost-effective while still supporting advanced use cases.

Yes. Assistants and agents can be deployed at multiple layers from public cloud infrastructure to observability tools to specific network functions like IMS. This ensures operators can monitor, troubleshoot, and manage services consistently across their environment.

The current step is combining automation with conversational AI for faster, more intuitive operations. The long-term vision is Agentic AI, which consists of distributed, autonomous agents that collaborate across domains, enabling self-healing and self-optimizing networks.

By interfacing directly with IMS components, GenAI assistants can execute live diagnostics, monitor component health, and suggest corrective actions in real time, reducing mean time to resolution and improving service reliability.

ng-voice’s cloud-native IMS is flexible, fully containerized, and API-driven, making it the ideal foundation for GenAI-based assistants. Our approach integrates seamlessly with Kubernetes-based operations, allowing AI agents to interact directly with IMS and packet core functions in real time.

While chatbots typically follow predefined rules, GenAI assistants combine NLU with LLMs. This hybrid model enables them to interpret intent, recall context, and interact dynamically with real-time network data.
