In this blog, we will examine the benefits of microservices in telecoms when it comes to implementing IP Multimedia Subsystem (IMS) software. We will explore why a cloud-native IMS is a must-have to ensure elasticity, availability and hyperscalability. We will also look at how a cloud-native, microservices-based IMS, such as the one developed by ng-voice, can harness a small resource footprint and automation to deliver significant cost savings over time.

Historically, the network software that supports IP-based services was developed as a monolith, with functions such as user session information, state handling, and packet analysis and processing all tightly bundled together in one large codebase.

Instead of accessing data from a single source, each component would typically store its own copy of user and state information. As the system took on more tasks, such as tracking and storing user data or managing IP sessions (for example IMS SIP registration and call flows), its size and complexity grew constantly. The result was one ever-expanding binary executable that could quickly become unwieldy.

Until recently, most network function software was written as a custom application running on custom hardware, with an embedded OS as the underlying platform. Performance was optimized for each environment individually and, whenever new features were added, the whole system had to be re-tuned.

With the adoption of commercial off-the-shelf (COTS) hardware, this network function software moved to a virtualized platform using either OpenStack or VMware vSphere. As part of the IMS virtualization process, the software was only minimally refactored: some hardware dependencies were removed so that it could run on a hypervisor, but its core functionality, state retention and process/thread management remained largely intact.

Virtualizing the IMS offered some benefits, such as the ability to quickly save and restore a system in the event of failure or to easily run multiple instances of the same service. But these advantages were limited in scope and sometimes came with unintended consequences. For example, while deploying multiple instances worked well, it also consumed double the resources. Because resource management was less efficient, virtualized software sometimes needed more resources than the same software running on dedicated custom hardware. It was also difficult to replicate the operational environment of physical devices in this new world of virtual IMS.

To fully capture the benefits of a cloud model, network function software needed to be refactored further to run in containers, reusing components and making the architecture more stateless. This way it could be deployed more efficiently than virtual machines, with optimized resource footprints, in public or private clouds.

Again, when refactoring their virtualized applications, many vendors took the shortcut of removing VM dependencies and building large container images. These were not true microservices but rather monoliths slotted into containers. So, while these containers can be deployed in the cloud, they are not optimized for it and cannot deliver the cloud’s benefits of scalability, availability and elasticity.

One example is the way the Proxy CSCF (P-CSCF) and Serving CSCF (S-CSCF) handle SIP dialogs and transactions to establish, maintain and terminate a session. Each SIP dialog is a peer-to-peer relationship between the subscriber’s device and another endpoint, such as a user agent or SIP server, and a single dialog may involve multiple transactions. In a monolithic or VM-based implementation, all the processing logic and state associated with these transactions and dialogs, such as IMS SIP registration, must be stored and replicated in each component, which makes the software inefficient to run in the cloud.

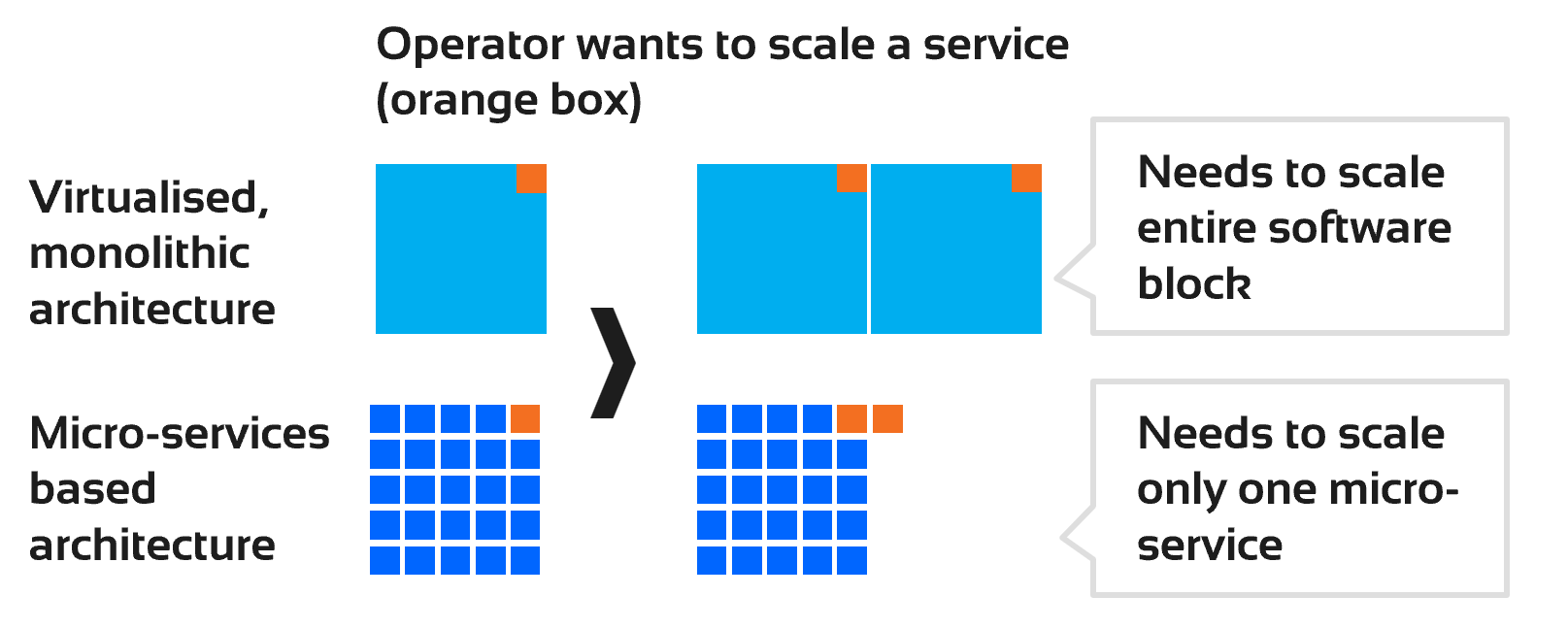
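The alternative to replicating dialog state in every component is to keep that state outside the processing logic, so that any replica can handle the next transaction of a dialog. The sketch below illustrates the idea under stated assumptions: `SharedDialogStore` and `handle_transaction` are hypothetical names, and the in-memory store stands in for whatever shared, replicated store a real deployment would use. The dialog key (Call-ID plus local and remote tags) and the dialog states follow RFC 3261; this is not ng-voice’s implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class DialogId:
    # RFC 3261 identifies a dialog by its Call-ID plus the local and remote tags.
    call_id: str
    local_tag: str
    remote_tag: str


@dataclass
class DialogState:
    state: str = "early"  # RFC 3261 dialog states: early, confirmed, terminated
    transactions: list[str] = field(default_factory=list)  # Via branch IDs seen


class SharedDialogStore:
    """Hypothetical stand-in for an external, replicated state store.

    Kept in memory so the sketch runs on its own; a real deployment would use
    a shared store that every worker replica can reach.
    """

    def __init__(self) -> None:
        self._dialogs: dict[DialogId, DialogState] = {}

    def get_or_create(self, dialog_id: DialogId) -> DialogState:
        return self._dialogs.setdefault(dialog_id, DialogState())


def handle_transaction(store: SharedDialogStore, dialog_id: DialogId,
                       branch: str, new_state: Optional[str] = None) -> DialogState:
    """Stateless worker logic: all dialog state is read from and written to the
    store, so any replica can process the next transaction of the same dialog."""
    dialog = store.get_or_create(dialog_id)
    dialog.transactions.append(branch)
    if new_state is not None:
        dialog.state = new_state
    return dialog


store = SharedDialogStore()
call = DialogId("a84b4c76e66710", "1928301774", "314159")
handle_transaction(store, call, "z9hG4bK776asdhds", "confirmed")  # e.g. handled by replica A
dialog = handle_transaction(store, call, "z9hG4bK74b43", "terminated")  # e.g. handled by replica B
print(dialog.state, len(dialog.transactions))  # terminated 2
```

Because the workers hold no dialog state of their own, adding or removing worker replicas does not require copying state between them.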

What is more, if one service is impacted, it cannot be scaled individually. Many vendors market their software as cloud-enabled or cloud-ready, but rather than refactoring their applications into true microservices, they have merely put container wrappers around their virtualized monoliths.

Students of computer science will be familiar with the bin packing problem, in which items of different sizes must be packed into as few fixed-capacity bins as possible. The larger the items, the harder it is to fit them efficiently, which leaves plenty of space unused. We can apply this analogy to IMS containers and virtual machines. If an application is a truly cloud-native IMS based on microservices, the containers running those microservices will be much smaller than virtual machines. This means we can pack those containers into the bin, in this case the server, much more efficiently than virtual machines.
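The bin packing analogy can be made concrete with first-fit decreasing (FFD), a standard bin packing heuristic. In this sketch the server capacity and workload sizes are hypothetical units chosen for illustration, not ng-voice measurements: both workloads total 200 units, once as five large VM-sized items and once as fifty small container-sized items.

```python
def first_fit_decreasing(item_sizes: list[int], bin_capacity: int) -> list[list[int]]:
    """Pack items into bins with the first-fit-decreasing heuristic.

    Items are sorted largest first; each goes into the first bin with enough
    remaining capacity, or into a new bin if none fits.
    """
    bins: list[list] = []  # each entry: [remaining_capacity, [item sizes]]
    for size in sorted(item_sizes, reverse=True):
        if size > bin_capacity:
            raise ValueError(f"item of size {size} exceeds bin capacity {bin_capacity}")
        for b in bins:
            if b[0] >= size:
                b[0] -= size
                b[1].append(size)
                break
        else:  # no existing bin had room
            bins.append([bin_capacity - size, [size]])
    return [items for _, items in bins]


SERVER_CAPACITY = 64  # hypothetical capacity units per server
vm_bins = first_fit_decreasing([40] * 5, SERVER_CAPACITY)          # 5 VMs x 40 units
container_bins = first_fit_decreasing([4] * 50, SERVER_CAPACITY)   # 50 containers x 4 units
print(len(vm_bins), len(container_bins))  # 5 4
```

Only one 40-unit item fits per 64-unit server, so each server strands 24 units; the 4-unit items fill servers completely and reach the lower bound of ceil(200 / 64) = 4 servers.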

Each microservice is independent and API-driven, so it can be stopped and restarted with minimal impact on the other components. By contrast, a monolithic application must be stopped in its entirety each time, which impacts traffic.

Microservices can be stitched into a mesh to build different services or the data paths that carry signaling or packets. They can be replicated to provide redundancy and load sharing on those internal data paths for better traffic handling. This is key to creating replica sets and letting Kubernetes manage the desired state of the microservices to optimize the handling of control or data plane traffic.
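Kubernetes manages replica sets declaratively: the operator states how many replicas of each service should run, and controllers repeatedly compare that with what is actually running and act to close the gap. The function below is a simplified model of one such reconciliation pass, not Kubernetes code; `reconcile` and the service name `retired-svc` are hypothetical, and the sketch assumes every start or stop action succeeds.

```python
from __future__ import annotations


def reconcile(desired: dict[str, int], running: dict[str, int]) -> list[tuple[str, str, int]]:
    """One pass of a desired-state reconciliation loop.

    `desired` and `running` map a service name to a replica count. Returns the
    actions needed to converge and updates `running` as if they succeeded.
    """
    actions: list[tuple[str, str, int]] = []
    for service, want in desired.items():
        have = running.get(service, 0)
        if have < want:
            actions.append((service, "start", want - have))
        elif have > want:
            actions.append((service, "stop", have - want))
        running[service] = want
    # Anything still running that is no longer declared is scaled to zero.
    for service in [s for s in running if s not in desired]:
        count = running.pop(service)
        if count > 0:
            actions.append((service, "stop", count))
    return actions


running = {"p-cscf": 1, "s-cscf": 4, "retired-svc": 2}
desired = {"p-cscf": 3, "s-cscf": 2}
print(reconcile(desired, running))
# [('p-cscf', 'start', 2), ('s-cscf', 'stop', 2), ('retired-svc', 'stop', 2)]
print(reconcile(desired, running))  # [] once the cluster has converged
```

Running the same pass again is harmless, which is why a controller can simply repeat it on a timer or on every change event.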

Techniques such as ‘make before break’ can significantly increase availability: the new software is enabled first, traffic is moved to the new microservice, and only then is the old microservice taken out of service.
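The ordering is what makes the technique work, so it helps to see it as code. In this minimal sketch, `Router`, `make_before_break`, and the `is_ready` and `drain` callbacks are hypothetical placeholders for whatever readiness checks and traffic steering a real platform provides; the point is only that the old instance is removed after the new one is ready and carrying traffic.

```python
from __future__ import annotations

from typing import Callable


class Router:
    """Hypothetical traffic router: the set of instances currently receiving traffic."""

    def __init__(self, active: set[str]) -> None:
        self.active = set(active)


def make_before_break(router: Router, old: str, new: str,
                      is_ready: Callable[[str], bool],
                      drain: Callable[[str], None]) -> None:
    # "Make": do not touch traffic until the new instance reports ready.
    if not is_ready(new):
        raise RuntimeError(f"{new} is not ready; {old} stays in service")
    # Both instances carry traffic briefly, so there is no gap in service.
    router.active.add(new)
    # "Break": only now remove the old instance and let in-flight work drain.
    router.active.discard(old)
    drain(old)


router = Router({"s-cscf-v1"})
drained: list[str] = []
make_before_break(router, "s-cscf-v1", "s-cscf-v2",
                  is_ready=lambda name: True, drain=drained.append)
print(router.active, drained)  # {'s-cscf-v2'} ['s-cscf-v1']
```

If the new instance never becomes ready, the function raises before changing anything, so the old instance keeps serving traffic.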

Since microservices, by definition, have small footprints, new instances can be launched on demand in an elastic manner. Kubernetes continuously brings the microservices already running into line with the desired state requested by the operator. This automation enables automatic restarts and lets sets of microservices scale up or shrink according to demand, with minimal changes to the infrastructure.
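One concrete example of demand-driven scaling is the Kubernetes Horizontal Pod Autoscaler, whose documented rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made while the ratio stays within a tolerance (0.1 by default). The source does not say which autoscaling mechanism or metric ng-voice uses; the function below is a simplified sketch of that rule (it omits stabilization windows and other HPA details), and the replica bounds and metric values are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style rule: scale in proportion to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, 90, 60))   # 6: load 50% above target, scale out
print(desired_replicas(4, 63, 60))   # 4: within tolerance, no change
print(desired_replicas(4, 20, 60))   # 2: load well below target, scale in
print(desired_replicas(4, 300, 60))  # 10: signaling storm, capped at max_replicas
```

The upper bound matters during signaling storms: without it, a fivefold spike would ask for 20 replicas at once.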

Microservices can be automated at a highly granular level, making them extremely efficient at reporting and monitoring event logs and metrics. Web 2.0 architectural principles can be applied to network functions by separating the core behavior of each network function from the reporting and management behavior common to all components.
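A common way to keep reporting separate from core behavior is to attach it from the outside, so the same metrics logic wraps every component without appearing in its code. The sketch below uses a Python decorator for this; `instrumented`, `METRICS`, `LATENCY_MS` and the SIP-style `handle_register` handler are all hypothetical, and a real deployment would export these values to a monitoring system rather than keep them in module-level variables.

```python
import time
from collections import Counter
from functools import wraps

METRICS: Counter = Counter()       # hypothetical shared metrics sink
LATENCY_MS: dict[str, float] = {}  # last observed latency per handler, in ms


def instrumented(func):
    """Add common reporting (success/error counts, latency) around a handler."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
            METRICS[f"{func.__name__}.ok"] += 1
            return result
        except Exception:
            METRICS[f"{func.__name__}.error"] += 1
            raise
        finally:
            LATENCY_MS[func.__name__] = (time.perf_counter() - start) * 1000
    return wrapper


@instrumented
def handle_register(user: str) -> str:
    # Core network-function behavior only: no logging or metrics code here.
    return f"200 OK {user}"


handle_register("alice")
print(METRICS["handle_register.ok"])  # 1
```

Because the reporting lives in one wrapper, changing what is measured does not require touching any handler.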

The microservices architecture also makes CI/CD/CT easy to implement in telecoms. Since the code is segmented and API-driven, it is much easier to manage versions and to run continuous development, integration and testing that keep software up to date.

Designed for the cloud and built on a microservices-based architecture, ng-voice’s Hyperscale IMS illustrates how this approach can be both flexible and resource-efficient. Its IMS containers are small, 15-25 MB per component, which leads to a lower resource footprint.

Containers and microservices also start up much faster: typically well under a minute, compared with 5 minutes or more for legacy systems, depending on the vendor and equipment configuration.

Upgrades and updates are carried out automatically, eliminating manual steps and reducing the risk of error. This automation also makes testing, deployment and rollback faster. Upgrades that previously took hours can now be done in minutes, because only the affected microservice is patched or upgraded rather than the entire system.

Building automation deep into ng-voice’s IMS also has benefits for self-healing and autoscaling. Kubernetes acts as the brain of the system, keeping microservices running smoothly inside their containers. It restarts or replaces them automatically if they fail, reducing recovery time to seconds compared with minutes for traditional systems. It can also respond to traffic peaks and signaling storms by scaling dynamically in real time.
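Self-healing in Kubernetes rests on two simple ideas: only the failed container is restarted, and repeated crashes are restarted with an exponential back-off (the Kubernetes documentation describes delays of 10 s, 20 s, 40 s and so on, capped at five minutes). The sketch below models one supervision pass under those assumptions; `heal`, `backoff_delay` and the `is_alive` and `restart` callbacks are hypothetical, and the source does not describe ng-voice’s exact mechanism.

```python
from __future__ import annotations

from typing import Callable


def backoff_delay(restart_count: int, base: float = 10.0, cap: float = 300.0) -> float:
    """Delay before the next restart: 10 s, 20 s, 40 s, ... capped at 300 s."""
    return min(base * 2 ** restart_count, cap)


def heal(restart_counts: dict[str, int],
         is_alive: Callable[[str], bool],
         restart: Callable[[str, float], None]) -> dict[str, float]:
    """One supervision pass: restart only services whose liveness check fails.

    `restart(name, delay)` is expected to schedule the restart after `delay`
    seconds. Healthy services are never touched, which is the fault isolation.
    Returns {service name: delay in seconds} for the services restarted.
    """
    scheduled: dict[str, float] = {}
    for name, count in list(restart_counts.items()):
        if not is_alive(name):
            delay = backoff_delay(count)
            restart(name, delay)
            restart_counts[name] = count + 1
            scheduled[name] = delay
    return scheduled


counts = {"p-cscf": 0, "s-cscf": 2}
restarted: list[tuple[str, float]] = []
print(heal(counts, is_alive=lambda n: n != "s-cscf",
           restart=lambda n, d: restarted.append((n, d))))  # {'s-cscf': 40.0}
```

The first restarts happen within seconds, which is where the fast recovery comes from; the growing delay only kicks in for a service that keeps crashing.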

A smaller footprint allows resources to be used more efficiently and this can give telecoms companies significant cost savings.

According to a recent analysis by BCG and AWS, a telco with 10 million subscribers could see its total cost of ownership decrease by 60% within 5 years if it migrates its IMS to a cloud-native architecture and deploys it on a public cloud. This would include potential savings of 40% on product costs, 70% on platform costs and 80% on operations costs.
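An overall TCO figure like this can be read as a cost-weighted average of the per-category savings, which is why 60% sits between the 40% and 80% category figures. The study’s actual cost split and method are not given here, so the split below (45% product, 20% platform, 35% operations) is purely HYPOTHETICAL, chosen only to show how the arithmetic combines; `blended_saving` is likewise a hypothetical helper.

```python
def blended_saving(cost_shares: dict[str, float], savings: dict[str, float]) -> float:
    """Overall cost reduction as the cost-weighted average of per-category savings."""
    if abs(sum(cost_shares.values()) - 1.0) > 1e-9:
        raise ValueError("cost shares must sum to 1")
    return sum(cost_shares[k] * savings[k] for k in cost_shares)


# Per-category savings as quoted from the BCG/AWS analysis.
savings = {"product": 0.40, "platform": 0.70, "operations": 0.80}
# HYPOTHETICAL share of today's total cost in each category (not from the study).
shares = {"product": 0.45, "platform": 0.20, "operations": 0.35}

print(round(blended_saving(shares, savings), 2))  # 0.6
```

Under this assumed split, 0.45 × 40% + 0.20 × 70% + 0.35 × 80% = 18% + 14% + 28% = 60%; a different split would give a different overall figure.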

The ng-voice Hyperscale IMS applies the cloud-native principles of state management, microservice architecture, dependency management, concurrency, configuration management, admin/user space management and disposability. It offers the full range of microservices, delivered in secure containers that evolve and are lifecycle-managed independently. ng-voice follows full CI/CD/CT practices, with fully automated deployment and continuous testing of deployed components so that no failure goes unnoticed.

All of this is why several telecom carriers already use ng-voice’s cloud-native IMS, on-premises or in the public cloud, to light up their VoLTE and VoNR services, from design to live service, in record time.

Monolithic IMS platforms bundle all functions together, making them heavy, rigid, and resource-intensive. Microservices break these functions into independent components, allowing operators to scale only what’s needed and drastically reduce waste.

Microservices allow each IMS function to scale individually instead of scaling the whole system. This ensures operators can handle traffic peaks efficiently without overprovisioning resources.

Microservices avoid idle capacity by allocating resources dynamically, and only to active workloads. This makes the IMS far more lightweight and cost-efficient, especially compared with legacy architectures that must run at fixed capacity.

Microservices suit operators of every size. For large telcos, they deliver hyperscale performance; for smaller operators or MVNOs, they allow the IMS to run on a minimal footprint without sacrificing capability.

Legacy IMS systems often suffer from slow updates, high resource overhead, and limited fault isolation. Microservices eliminate these constraints by decoupling every function and enabling granular control.

ng-voice’s IMS uses ultra-light containers and stateless microservices designed specifically for cloud environments, making it far more efficient than repackaged legacy stacks. This gives operators high performance with a fraction of the CPU, memory, and operational complexity.

If one microservice fails, it can restart independently without affecting the rest of the IMS. This fault isolation ensures higher availability and more predictable service continuity.

By scaling precisely to demand and minimizing idle compute, microservices significantly cut infrastructure and operational costs compared with fixed, monolithic IMS architectures.
